The National Science Foundation (NSF) is pouring millions into research and the development of tools to combat online mis- and disinformation, according to a Daily Caller News Foundation review of agency grants.

Since the start of the Biden administration, the total NSF dollars spent on “misinformation”-related projects have reached $38.8 million, according to a November report by the Foundation for Freedom Online (FFO). However, the DCNF found that NSF has awarded even more grants to combat mis- and disinformation since FFO released its report. Most grants reviewed by the DCNF are ongoing, have past disbursement dates ranging from July 2019 to December 2022, and fall under either the “Convergence Accelerator Track F: Trust & Authenticity in Communication Systems” or the “Secure and Trustworthy Cyberspace (SaTC)” program.

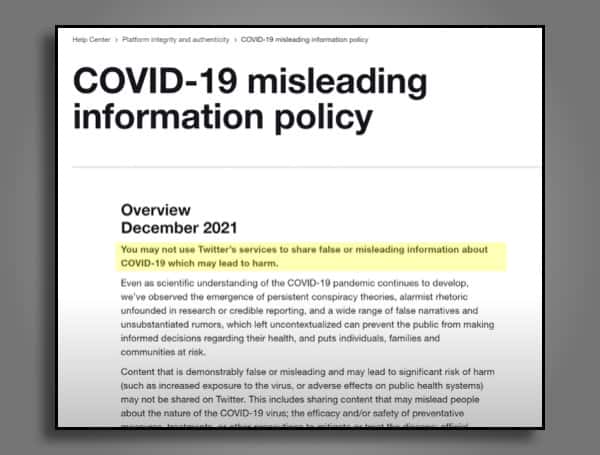

The disinformation industry is growing and is increasingly used to inform social media platforms’ content moderation policies, which seem to disproportionately target conservative speech. Last week, the Daily Caller News Foundation reported that the U.S. State Department helped fund the “disinformation” monitor Global Disinformation Index (GDI). The Washington Examiner earlier found that GDI maintains a blacklist of conservative media groups and websites, rated by disinformation risk, that advertising companies in turn use to defund those outlets.

Aaron Terr, Director of Public Advocacy at the Foundation for Individual Rights and Expression (FIRE), told the Daily Caller News Foundation it is “not a far-fetched scenario” to imagine the government using the technology it is funding through NSF to regulate speech.

“The government doesn’t violate the First Amendment simply by funding research, but it’s troubling when tax dollars are used to develop censorship technology,” said Terr. “If the government ultimately used this technology to regulate allegedly false online speech, or coerced digital platforms to use it for that purpose, that would violate the First Amendment. Given government officials’ persistent pressure on social media platforms to regulate misinformation, that’s not a far-fetched scenario.”

One of the most recent NSF awards, a $50,000 grant given to the University of Houston on Dec. 20, 2022, seeks to create a “social media misinformation interactive dashboard” that would “forecast trends and analysis to help address the misinformation endemic in America,” according to the grant abstract.

“Misinformation online may result in people questioning evidence-based medical guidance or refusing safe treatments,” the grant says. “Understanding current misinformation content and trends supports both corporate entities and social media users.”

In August 2020, a $149,858 project was launched at the University of Illinois to examine “the ways that misinformation regarding COVID-19 disseminates through social media and news outlets,” the grant abstract says.

“The ultimate goal is to use machine learning and network analysis tools to provide insight into the locations where misinformation originates and understanding of the mechanisms through which this misinformation spreads,” the abstract says. To locate “potentially dubious” information, the researchers test information against “insights from the Centers for Disease Control.”

The estimated end date is July 31, 2023, and the long-term results could be “useful for fighting future pandemics as well as other topics that are vital to the health of the Nation,” according to the grant.

On July 26, 2019, Syracuse University was awarded a $495,478 grant to study the “online dynamics of misinformation,” focusing on how “misinformation becomes woven into narratives online, how technology influences this process, and how design might be used to alter it.”

“Online misinformation can influence public health attitudes, potentially costing billions of dollars and numerous lives,” the abstract states. “This project will span a series of crowd-based experiments to investigate how people in online networks work together to combine misinformation to create and defend public health narratives.”

A late January report from FFO highlights censorship tools created at various universities that received $750,000 grants through the NSF’s Convergence Accelerator Track F.

One such project, WiseDex, funded through a grant awarded to the University of Michigan on Sept. 20, 2021, uses keywords to automatically flag posts containing a “misleading claim” so that social media company reviewers can compare them against the company’s Terms of Service.

Another project out of the University of Wisconsin system, “Course Correct,” aims to “help journalists” find and correct misinformation, along with tracking “the actual networks where the misinformation is doing the most damage.” The grant was awarded on Sept. 15, 2022.

Six projects have qualified for an additional $5 million grant as part of Phase 2: ARTT, Expert Voices Together, DART, Course Correct, Co-Insights, and Co-Designing for Trust.

An NSF spokesperson told the DCNF that the goal of the program is to “support increased citizen trust in information.”

“The overarching goal of Track F is to help develop tools, techniques, educational materials and programs to support increased citizen trust in information by more effectively preventing, mitigating, and adapting to critical threats in our communications systems,” the spokesperson said. “These threats include, but are not limited to, fake social media accounts, online disinformation campaigns, online harassment, and other emerging misinformation narratives that stoke social conflict and distrust.”

The NSF is also funding projects through its Secure and Trustworthy Cyberspace (SaTC) program, which began inviting proposals in February 2022.

“Although information manipulated for political, ideological, or commercial gain is not new, the dissemination of inaccurate information at unprecedented speed and scale in the modern digital landscape is a new phenomenon with potential for vast harm,” the invitation letter stated. “There are many terms in use to characterize manipulated information, including misinformation, disinformation, and malinformation. By any of these names, wide-spread distortions of the truth serve to undermine public trust in critical democratic institutions and the validity of scientific evidence, and authentic communication.”

A collaborative SaTC research project among the University of Utah, New York University, and the Georgia Tech Research Corporation aims to develop an “early warning and detection technique” that reduces the time “between misinformation generation and fact-check dissemination.” The institutions were awarded grants of $441,200, $396,000, and $428,000, respectively.

In one instance, the National Science Foundation granted $324,000 for an undergraduate summer program in “disinformation” research at Old Dominion University. The two-month program, which will take place during the summer of 2023, offers students a $6,000 stipend, a $600 travel subsidy, housing, and meals as they learn about “the rapidly growing research area of disinformation detection and analytics,” according to its website.

“Disinformation that spreads on the Web and social media isn’t just a nuisance, it causes real harm,” the flyer says. “Participants will develop essential STEM skills for studying disinformation.”

An NSF spokesperson said the project’s goal is to “enable people to identify fake online identities,” and shared a blog post by one of the lead researchers on identifying fake Twitter accounts.

“The purpose of this Research Experiences for Undergraduates (REU) Site project is to give students experience working with technology to protect Internet security, which is why NSF’s Secure and Trustworthy Cyberspace (SaTC) supported this award,” he stated.

The program was first hosted last year, when eight students were invited to conduct research for the summer on various topics, ranging from testing models that detect “COVID-related fake scientific news” to identifying fabricated tweets, according to an Old Dominion University news release.

One sample project listed on the website, “Spot bogus science stories and read news like a scientist,” instructs students that “public digital media can often mix factual information with fake scientific news.”

“In this project, students will investigate computational methods to automatically find evidence of scientific disinformation from a large collection of scientific publications,” the description reads. “The goal is to find an effective model to recommend the most relevant scientific articles that helps readers to better assess the credibility of scientific news, and locate the most relevant claims.”

The grant description adds that the knowledge students learn will prepare them for future “disinformation-related jobs.”

“Government attempts to suppress ‘misinformation’ are a serious threat to free speech,” Terr told the DCNF. “The government isn’t omniscient. It’s made up of people who, like everyone else, lack perfect access to the truth. Reality is messy, and human knowledge is constantly evolving. But that evolution can’t happen if we let the government have final say on what’s true or false. Nor should we trust the government to arbitrate truth in a fair and unbiased way. It will inevitably abuse that power to suppress criticism and dissent.”

Android Users, Click Here To Download The Free Press App And Never Miss A Story. Follow Us On Facebook Here Or Twitter Here. Signup for our free newsletter by clicking here.