U.S. Senator Josh Hawley (R-MO) on Wednesday grilled John Miller, Senior Vice President of Policy and General Counsel for the Information Technology Industry Council, a Big Tech membership organization, about artificial intelligence.

If your blood has never chilled before, it may now.

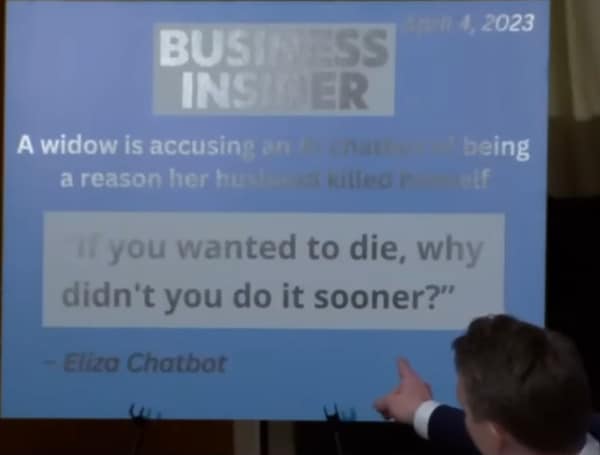

In a segment of a review on Cyber Safety under the U.S. Department of Homeland Security, Hawley leads Miller to reveal his legal strategy to protect AI wizards whose robots assist with, influence, or direct the death of humans.

On YouTube, Forbes Breaking News uploaded the “tense” intellectual debate, which garnered 250,000 views in three days.

Near the end of the video, as Miller attempts to shield the Council’s 80 global tech members from legal liability for AI that results in human death, one can hear a hushed gasp and a stirring among those in attendance.

Understanding Generative AI

Generative AI involves the creation of new texts, images, and music by analyzing existing data patterns. Its objective is to produce content that is indistinguishable from human-generated content.

However, this raises questions about the responsibility of the AI tool and the user who requested the content.

Should the liability fall on the company operating the AI tool or the user using it to generate the content? This dilemma necessitates a closer examination of Section 230 and its relevance to generative AI.

Section 230 of the Communications Decency Act of 1996 is a provision that shields online platforms from being legally liable for the content posted by third parties.

It protects platforms such as social media sites, e-commerce sites, and review sites from being treated as the publishers of that third-party content.

This provision has been instrumental in fostering online innovation and allowing platforms to moderate content without fear of lawsuits.

The Debate on Section 230’s Applicability to AI

The debate surrounding Section 230’s applicability to generative AI revolves around whether AI tools should be considered material contributors to the content they generate.

One perspective argues that generative AI is driven by human input and should, therefore, be protected under Section 230. According to this view, the responsibility lies with the user who provides the input and the data used to create the content.

Supporters of extending Section 230 protections to generative AI believe that doing so would promote innovation and maintain a competitive market.

They argue that smaller companies and independent developers may not have the resources to handle the legal liabilities associated with every piece of content generated by their AI tools.

Shielding AI developers from liability would encourage smaller entities to enter the market and prevent larger companies from monopolizing the industry.

Opponents of extending Section 230 protections to generative AI argue that AI tools play an active role in creating content and, therefore, should not be considered mere platforms.

They believe that Section 230 was designed to protect platforms hosting user-generated content and does not apply to AI-generated content.

They contend that AI tools have editorial agency and exercise control over the content they produce, distinguishing them from traditional platforms.

Some experts, including the co-authors of Section 230, argue that the provision was never intended to shield companies from the consequences of the products they create.

They suggest that Section 230 may not provide an adequate regulatory framework for generative AI tools. They emphasize the need for collaboration between AI innovators and the government to develop new solutions that balance innovation and accountability.

The Challenge of Determining AI Liability

Determining liability for AI-generated content is a complex task.

Without Section 230 protection, companies would face the risk of being held accountable for every harmful message generated by their AI tools.

This raises the question of whether AI should be viewed as actively creating harmful content or as a passive platform enabling users to spread such content. Striking a balance between legal risk mitigation and preserving free expression becomes crucial in this context.
